comp_viz_ml5

XR/AR+ Machine Learning

This is a collection of projects and experiments combining Augmented Reality (AR/XR) and Machine Learning for graphic rendering and for detection and tracking of motion, images, hands, face mesh mapping, gesture recognition and more. All projects are coded in JavaScript and play in modern browsers using JS libraries such as Three.js, MindAR, TensorFlow.js, MediaPipe, ml5.js, p5.js and VIDA.

AR App with Video and Image Marker

The image with the red circles is augmented with a video layer. Load the app on your smartphone by scanning the QR code or tapping the link, and then scan the image.

You will see a plane with video and sound floating on top of the image. You may also open this link on your desktop and show the red image with your phone. Tap the ‘Start AR’ button.

Interactive 3D animation AR with hand pose recognition using ML

An app that places an animated 3D model on an image target and recognizes hand poses using TensorFlow.js. When one of the user's hand poses is recognized, it triggers a different behavior in the animated 3D model: wave, jump, thumbs up and die!!

Load the marker image on your phone and load the app in the browser.

AR+ MediaPipe Face Mesh + AI generated mask:

Today I tested a mask filter generated by an AI as the texture for a face mesh anchor. MediaPipe Face Mesh estimates 468 3D face landmarks in real time, even on mobile devices. It employs machine learning (ML) to infer the 3D facial surface, requiring only a single camera input without the need for a dedicated depth sensor. I used a PNG as the mask, based on the template provided by Spark AR, together with the MindAR library and Three.js. Because the mask is mapped onto the tracked mesh, any facial movements are also tracked and mapped.

See the AI-generated image here, made with the natural language prompt ‘wolfram rule 30 colorful mask’

AR with 3D models

Having some fun developing XR for the browser… select your 3D hats, glasses and… earrings.

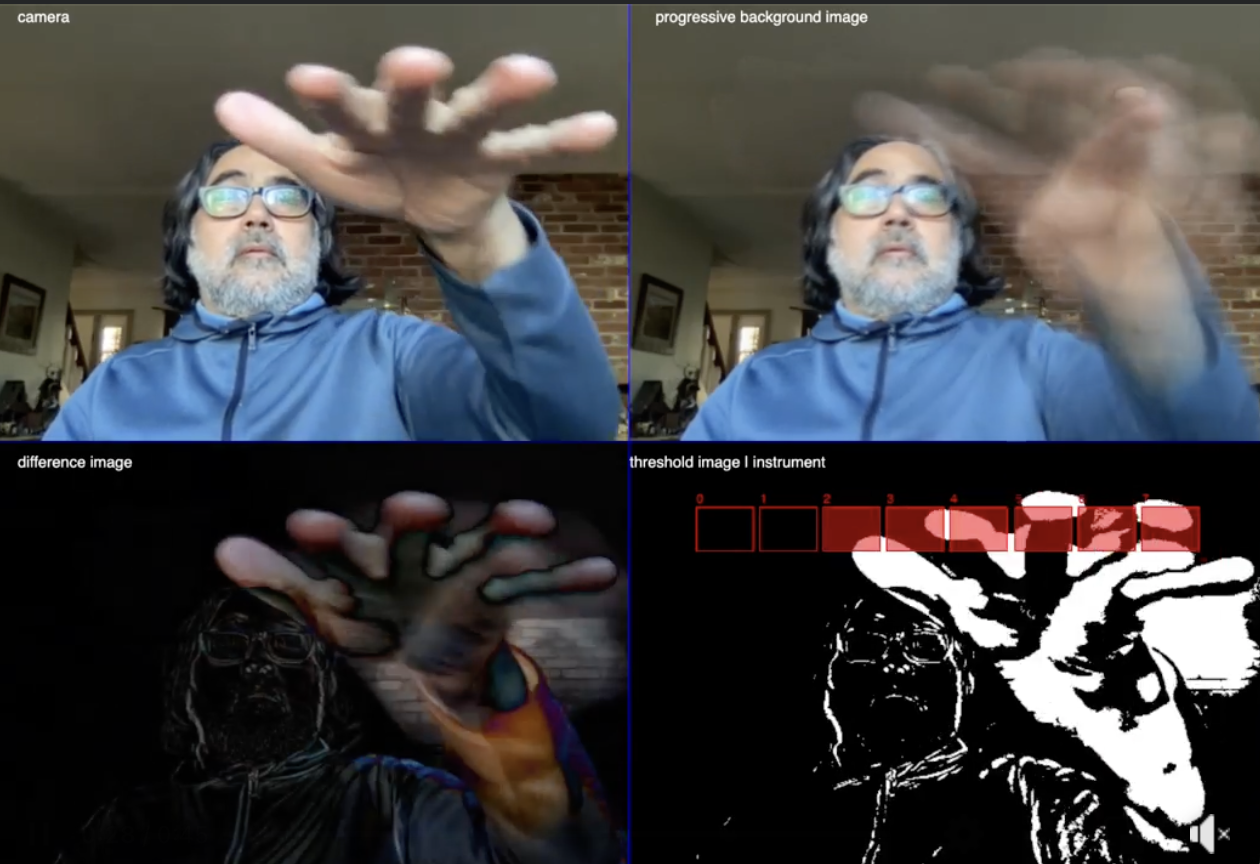

Video Hot Spots

Recreating in JavaScript the interactive triggering of sounds I used to do in Max/MSP/Jitter (2003). Motion is detected by progressive frame differencing and triggers several square-wave oscillators. I am using VIDA, a library that adds camera (or video) based motion detection and blob tracking functionality to p5.js. Mind-blowing that it runs in the browser.
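To give an idea of what frame differencing means, here is a minimal sketch in plain JavaScript. It is not VIDA's code or API: the function name `motionInZone`, the zone object and the threshold value are all assumptions made up for illustration, and it compares only two frames instead of keeping a progressive background as the library can.

```javascript
// Fraction of pixels inside `zone` whose brightness changed by more than
// `threshold` between two RGBA frames (same layout as ImageData.data).
// `zone` is a hypothetical { x, y, w, h } rectangle in frame pixels.
function motionInZone(prev, curr, frameWidth, zone, threshold = 30) {
  let changed = 0;
  for (let y = zone.y; y < zone.y + zone.h; y++) {
    for (let x = zone.x; x < zone.x + zone.w; x++) {
      const i = 4 * (y * frameWidth + x); // 4 bytes per pixel: R, G, B, A
      const before = (prev[i] + prev[i + 1] + prev[i + 2]) / 3;
      const after = (curr[i] + curr[i + 1] + curr[i + 2]) / 3;
      if (Math.abs(after - before) > threshold) changed++;
    }
  }
  return changed / (zone.w * zone.h);
}
```

A hot spot could then count as "active" when the returned fraction passes some level, say 0.1, and that is the moment to start its oscillator.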

Experiment with ML5: Hand Detection

Can I map the detected landmarks of a hand from the video to draw simple geometries on a canvas and suggest the presence of a hand? Part 2 of the computer vision adventures in p5 and MediaPipe/ML5. It blows my mind that this is running in the browser! Have fun!!!

PS: It takes 20 seconds to load the API.
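The mapping step can be sketched roughly like this. It assumes the landmarks arrive as an array of `{ x, y }` points in video pixels, which may not match the exact shape your ML5 version returns, and `mapLandmarksToCanvas` is a hypothetical helper, not part of any library.

```javascript
// Map landmark points from video pixel coordinates onto a canvas of a
// different size. `mirror` flips x so the drawing behaves like a selfie view.
// Assumed input shape: [{ x, y }, ...] in video pixels (hypothetical).
function mapLandmarksToCanvas(landmarks, video, canvas, mirror = false) {
  const sx = canvas.width / video.width;
  const sy = canvas.height / video.height;
  return landmarks.map(({ x, y }) => ({
    x: (mirror ? video.width - x : x) * sx,
    y: y * sy,
  }));
}

// Example: a 640x480 video drawn, mirrored, on a 320x240 canvas.
const points = mapLandmarksToCanvas(
  [{ x: 320, y: 240 }, { x: 0, y: 480 }],
  { width: 640, height: 480 },
  { width: 320, height: 240 },
  true
);
```

In a p5 sketch each mapped point could then be drawn with something like `circle(p.x, p.y, 10)`.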

#cv #handdetection #p5 #javascript

Experiment with ML5: Face Detection

A text is written when a smile is detected.

Nose Tracking with Simple Noise

This app tracks the nose using face detection with machine learning. The position of the ‘nose’ in the frame triggers oscillator sounds when it is inside the hot spot areas. Very simple interactivity, but coded from scratch, detecting complex human features with JavaScript and all running in the browser.
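The hot spot check itself is just a point-in-rectangle test. A minimal sketch, assuming the nose position comes in as an `{ x, y }` point in frame pixels; the `hotSpots` list, its sizes and its frequencies are invented for the example and are not the values used in the app.

```javascript
// Hypothetical hot spots: rectangles in frame pixels, each with a pitch in Hz.
const hotSpots = [
  { x: 0, y: 0, w: 200, h: 200, freq: 220 },
  { x: 440, y: 0, w: 200, h: 200, freq: 440 },
];

// Return the first hot spot containing the point,
// or null when the point is outside all of them.
function hotSpotAt(point, spots) {
  const hit = spots.find(s =>
    point.x >= s.x && point.x < s.x + s.w &&
    point.y >= s.y && point.y < s.y + s.h
  );
  return hit ?? null;
}
```

On every frame the app would call `hotSpotAt(nose, hotSpots)`, set the oscillator to the `freq` of the hit, and silence it when the result is `null`.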

I am…

Face tracking using ML5, mapping a mask that responds to size changes. All in JavaScript.
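One way to make a mask follow the size of the face is to derive its position and size from the detected face box on every frame. This is only a sketch under assumptions: `maskPlacement` is a hypothetical helper, the face box is assumed to be `{ x, y, width, height }` in canvas pixels, and the default `scale` is a guess rather than the value used in the app.

```javascript
// Compute where to draw a mask image so it covers a detected face box.
// `maskAspect` is the mask image's width / height.
// `scale` > 1 makes the mask a bit larger than the face (assumed value).
function maskPlacement(faceBox, maskAspect, scale = 1.2) {
  const width = faceBox.width * scale;
  const height = width / maskAspect; // keep the mask image's proportions
  return {
    // Center the mask on the center of the face box.
    x: faceBox.x + faceBox.width / 2 - width / 2,
    y: faceBox.y + faceBox.height / 2 - height / 2,
    width,
    height,
  };
}
```

When the face moves closer to the camera the box grows, so the mask grows with it; in p5 the result could be passed to `image(maskImg, m.x, m.y, m.width, m.height)`, where `maskImg` is whatever image was loaded for the mask.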

Day of the Dead!

Celebration with a digital mask!